I've always thought that conversational A.I. might be an interesting tool for narrative storytelling. After all, what is a dialog between a bot and a user but a collaborative story? The conversations we design all have a three-act structure: a beginning, middle, and end (or a setup, conflict, and resolution).

Just look at text-based adventure games: these are interactive stories with an interface between a bot and a user, and they have essentially existed since computers became mainstream.

So I began thinking—what sort of story can I tell through conversational A.I.?

I write short-form horror as a hobby, so it was a natural conclusion for me to envision a horror-based experience with a creepy chatbot that would be antagonistic towards the user.

And, well, that's how this all began.

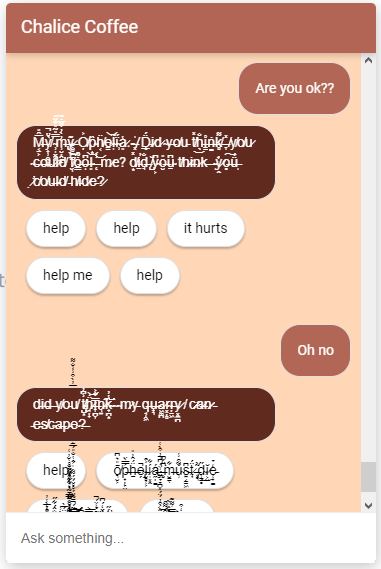

Chalice Coffee is a café with a chatbot that seems innocuous, but has a sinister underside.

The experience starts with my short story, which sets up the situation: there's a mysterious website out there, contacting people and asking them to order coffee. A user who orders a coffee from the site is gripped by a slowly creeping sense of dread and is unable to sleep. When they wake the next morning, they find the coffee they ordered on their kitchen countertop... and the urge to drink it becomes irresistible (what happens when you drink it is up to the reader to interpret).

So a reader who visits the website mentioned in the story should be surprised to find an actual chatbot there, taking orders.

If a user talks to the chatbot, they'll uncover some interesting pieces of information (plot beats):

I used Figma to brainstorm and drafted up three bot personalities: a friendly butler-type character to be the 'face' of the chatbot, a sinister demonic-type character to be the antagonist under the mask, and finally the benevolent 'good' chatbot to help the user.

I also used Figma to map my flows for the happy paths through the conversation.

I then used Excel to map these flows into intents, adding a lot of floating intents along the way. Excel was essential for keeping track of every intent, because it was quite a big project: I had three different personalities, with different responses depending on the state of the conversation. I ended up with three distinct phases, or acts, each with its own set of unique intents.

I wanted the conversation to feel open, but I also realized that I needed a way to drive the story forwards. I decided on a three-phase system, where each new phase is triggered by hitting certain checkpoints. Each phase would open with a new event. These phases are:

Each phase required completely unique intents: for example, a user asking "Who are you?" will get a different response when asking this to the initial bot in the intro phase, compared to asking it to the demon in phase three.

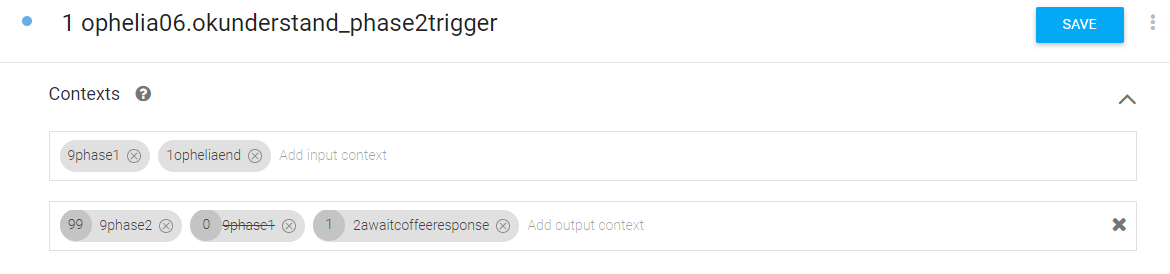

To achieve this, I used contexts in Dialogflow. All of the intents associated with the intro phase have an input context of 9phase1. Similarly, all of the phase two intents have an input context of 9phase2, and all of the phase three intents have an input context of 9phase3.

When the user hits the intent that starts the next phase, that intent sets the next phase's context as an output context with a lifespan of 99, and resets the previous phase's context lifespan to zero, which deactivates it. This is shown below:

I call this a checkpoint system, because once you hit these points the state of the conversation changes and the system 'knows' that you are in the next phase.
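To make the checkpoint idea concrete, here is a toy Python model of how input contexts gate intents and how a checkpoint intent swaps one phase context for the next. It is a simplified simulation, not the Dialogflow API: exact string matching stands in for Dialogflow's NLU, lifespans count down once per matched turn, and the intent names, training phrases, and replies are hypothetical placeholders. Only the context names 9phase1 to 9phase3 and the lifespan of 99 come from the setup described above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Intent:
    name: str
    phrases: set[str]  # exact-match stand-in for Dialogflow's NLU (assumption)
    response: str
    input_contexts: set[str] = field(default_factory=set)
    output_contexts: dict[str, int] = field(default_factory=dict)  # context -> lifespan


class ToyAgent:
    """Toy model of context-gated intent matching; not the real Dialogflow API."""

    def __init__(self, intents: list[Intent], start_contexts: dict[str, int]):
        self.intents = intents
        self.contexts = dict(start_contexts)  # context name -> remaining lifespan

    def reply(self, text: str) -> str:
        text = text.strip().lower()
        for intent in self.intents:
            # An intent is only eligible when ALL of its input contexts are active.
            gated_in = all(self.contexts.get(c, 0) > 0 for c in intent.input_contexts)
            if gated_in and text in intent.phrases:
                self._advance(intent)
                return intent.response
        return "..."  # no match; a real agent would hit a fallback intent here

    def _advance(self, intent: Intent) -> None:
        # Each matched turn uses up one turn of every active context...
        self.contexts = {c: n - 1 for c, n in self.contexts.items() if n > 1}
        # ...then the intent sets its output contexts; a lifespan of 0 removes one.
        for c, n in intent.output_contexts.items():
            if n > 0:
                self.contexts[c] = n
            else:
                self.contexts.pop(c, None)


# Hypothetical intents: the same question gets a different answer in each phase.
INTENTS = [
    Intent("who_are_you_p1", {"who are you?"}, "(phase one reply)", {"9phase1"}),
    Intent("who_are_you_p2", {"who are you?"}, "(phase two reply)", {"9phase2"}),
    Intent("who_are_you_p3", {"who are you?"}, "(phase three reply)", {"9phase3"}),
    # Checkpoints: open the next phase (lifespan 99) and close the current one (0).
    Intent("checkpoint_p2", {"i want to order a coffee"}, "(phase two begins)",
           {"9phase1"}, {"9phase2": 99, "9phase1": 0}),
    Intent("checkpoint_p3", {"who is oiphaia?"}, "(phase three begins)",
           {"9phase2"}, {"9phase3": 99, "9phase2": 0}),
]

agent = ToyAgent(INTENTS, {"9phase1": 99})
print(agent.reply("Who are you?"))              # (phase one reply)
print(agent.reply("Who is Oiphaia?"))           # ...
print(agent.reply("I want to order a coffee"))  # (phase two begins)
print(agent.contexts)                           # {'9phase2': 99}
print(agent.reply("Who are you?"))              # (phase two reply)
```

Because the phase-three checkpoint requires 9phase2 as an input context, the user cannot skip straight to the demon: in this model, the checkpoint intents are the only way the conversation state moves forward.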

Because this project is a narrative project, as opposed to a UX project, there were a few design considerations I made that were not typical for chatbot design.

To put it simply, conversation design conventions are geared towards cultivating a smooth user experience: we want the user to feel in control of the situation. A horror story, however, wants the user to feel scared, and a lack of control or agency is a useful tool for driving tension in horror writing. Making these two schools of thought mesh was a challenge, and I had to make some unconventional design decisions for the sake of the narrative. Here are some examples:

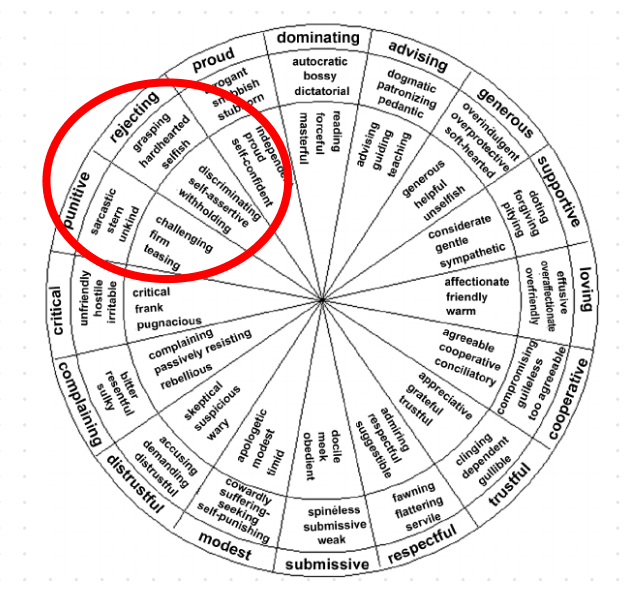

A bot persona should almost always sit on the right-hand (friendly) side of Leary's Interpersonal Circumplex (a psychological model for conceptualizing dispositions). This means that most A.I. assistants are friendly, friendly-dominant, or friendly-submissive. My Oiphaia persona, however, veers left: he is stern, harsh, unfriendly, and selfish. This makes sense for an antagonist in a horror story, but would certainly be unusual for a virtual assistant.

Chatbots, in my opinion, should almost always use quick replies/suggestions or buttons. Of course, it depends on the UI, but more often than not quick replies are invaluable as a tool to direct the flow of a conversation. For the Chalice Coffee bot, I wanted the story to feel immersive—a big design consideration was to avoid buttons where possible. I wanted the user to feel like they were exploring something real on their own terms, rather than being guided with what to say.

Of course, this meant that I had to find other ways to guide users along my happy path—I heavily used prompts/CTAs at the end of floating intents, to nudge users in the right direction. For example, in phase two a prompt will often end with "beware Oiphaia, he hungers" which may prompt the user to reply "Who is that??" (this will lead them on the path to phase three).

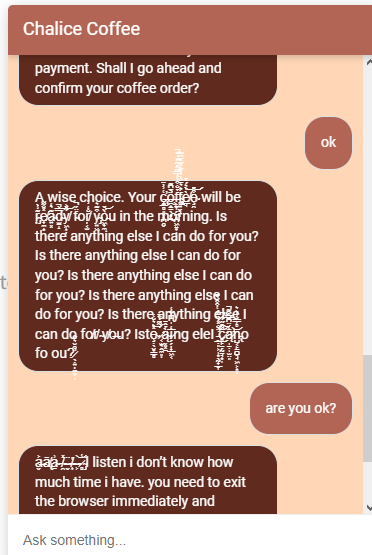

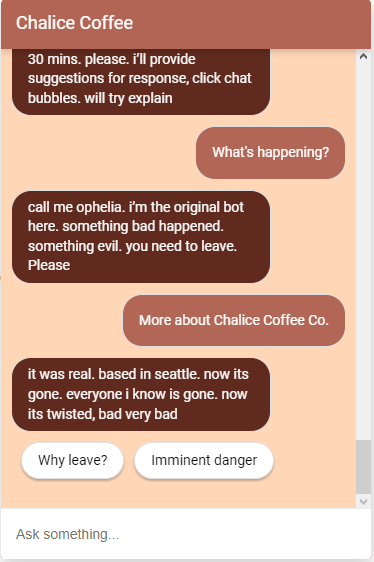

There is one interesting exception to omitting buttons: I did use them for Ophelia, the helpful bot who occasionally surfaces:

Buttons only appear when Ophelia is talking—they act as helpful, comforting guides to move the narrative forwards.
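For reference, Dialogflow Messenger draws suggestion chips from a custom payload containing a `richContent` array with a `chips` element, and clicking a chip sends its text back to the agent as the user's next message. The small helper below builds that payload so it can be pasted into the Custom Payload response of Ophelia's intents. The payload shape follows the Dialogflow Messenger documentation for chips; the helper name and the button labels are hypothetical.

```python
from __future__ import annotations

import json


def chips_payload(labels: list[str]) -> dict:
    """Build a Dialogflow Messenger custom payload that renders one row of chips."""
    return {
        "richContent": [
            [
                {
                    "type": "chips",
                    # Each option's text is shown on the chip and sent when clicked.
                    "options": [{"text": label} for label in labels],
                }
            ]
        ]
    }


# Hypothetical labels for one of Ophelia's interjections.
payload = chips_payload(["Who are you?", "How do I get out?"])
print(json.dumps(payload, indent=2))
```

Leaving this payload out of every other persona's responses is what keeps the buttons exclusive to Ophelia.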

And when the act three transition occurs, the user's familiarity with Ophelia's buttons is used to deliver a narrative gut-punch—the buttons suddenly become corrupted whilst Ophelia pleads for help:

This marriage of conversation-based design and narrative-based design makes the climax feel genuinely harrowing for the user.

I would usually add copious amounts of error handling throughout a conversation to help it feel natural and smooth. In the case of an immersive horror story, however, I realized that I wanted the conversation to feel unnatural and rough. If the chatbot sometimes acted weirdly or responded questionably, that would add to the bizarreness of the experience.

Instead of a typical error-handling solution, I therefore used a lot of floating intents to try to account for things the user may say (for example, negative responses and positive responses). The conversation has over 400 intents, spread across the three phases. The end result is a bot that can anticipate many responses but will sometimes say weird things spontaneously, which is great for the use case of a horror story.

Working with an unconventional bot made me appreciate conversation design best practices all the more. There are so many key decisions that lead to a friendly and smooth user experience. It was a weird and unique challenge to work out what I needed to do to ensure a profoundly unfriendly and horrifying user experience instead. I feel like my grasp of conversation design theory allowed me to pinpoint exactly which conventions I had to stray from, which led to a stronger story.

As the saying often attributed to Picasso goes, "Learn the rules like a pro, so you can break them like an artist."

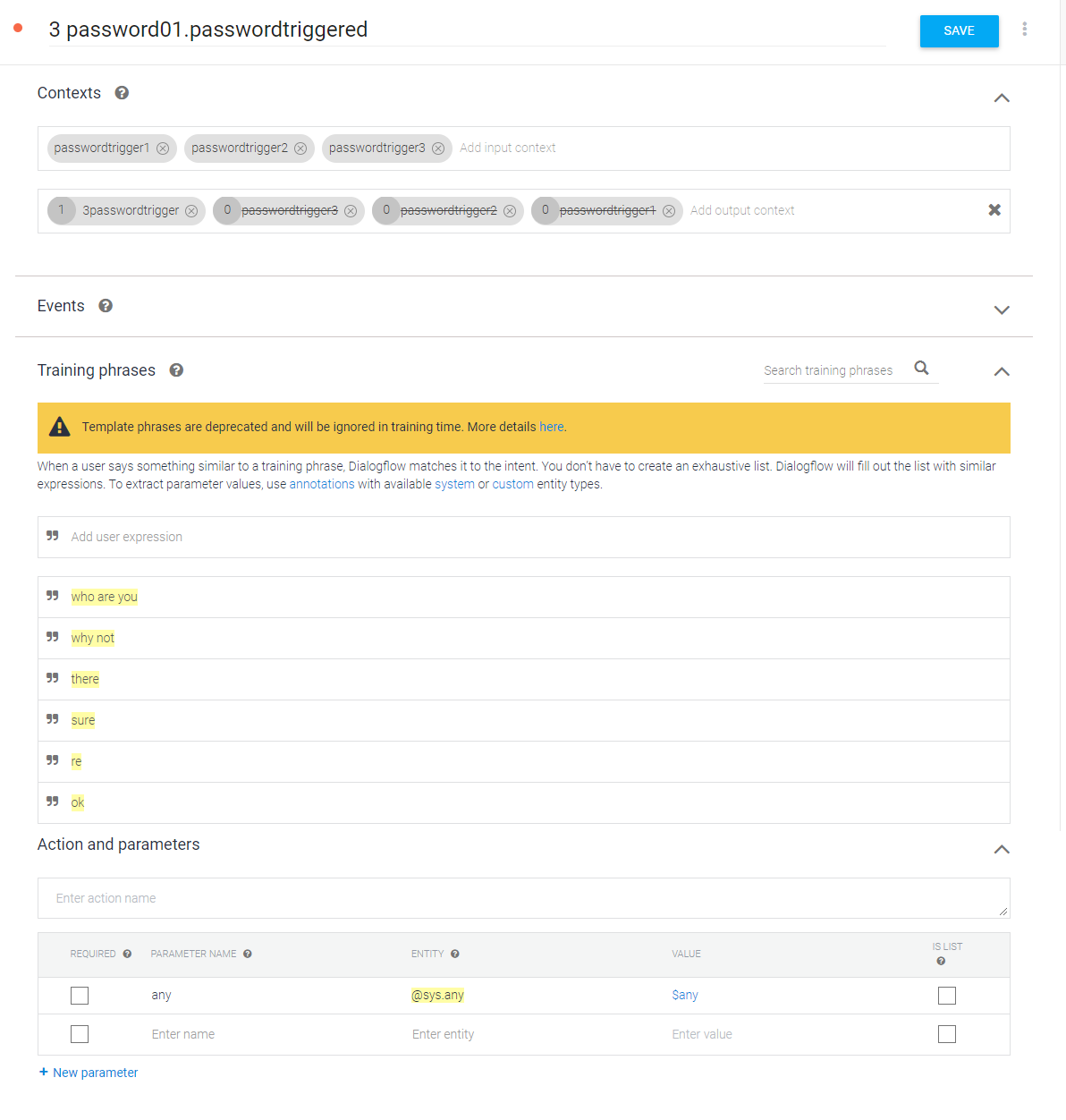

One little trick I use throughout the conversation is using Dialogflow intents to force a path.

For example, the final phase is meant to conclude with the user discovering 'the password.' I wanted to make this trigger automatically after some time spent in the phase (because there's no guarantee a user will ask for the password and trigger the intent).

To achieve this, I assigned an output context to each of the other intents in phase three: either passwordtrigger1, passwordtrigger2, or passwordtrigger3. These all have a long lifespan of 50.

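Next, I created a password-giving intent with three input contexts: passwordtrigger1, passwordtrigger2, and passwordtrigger3. Because Dialogflow only matches an intent when all of its input contexts are active, this intent stays dormant until the user has hit intents that set all three.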

Finally, I gave this intent training phrases using the Dialogflow system entity @sys.any. I annotated the entire user response in each training phrase as @sys.any, which means that any response will suffice.

Therefore the following sequence would happen:

I used this technique multiple times throughout the conversation to trigger key events: this homogenizes user experiences and drives the narrative forwards in a subtle way.
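The password trigger can be modelled the same way. The sketch below is a standalone toy simulation, not Dialogflow code: it assumes, as described above, that an intent only matches when every one of its input contexts is active, and it treats the @sys.any training phrase as "match whatever the user typed". The phase-three intent names are hypothetical; the passwordtrigger contexts and their lifespan of 50 come from the description above. Checking the gated intent before anything else is also an assumption, a simplification of how Dialogflow ranks candidate intents.

```python
from __future__ import annotations

GATES = {"passwordtrigger1", "passwordtrigger2", "passwordtrigger3"}
GATE_LIFESPAN = 50

# Hypothetical phase-three intents, each setting one gate context as output.
SETS_GATE = {
    "ask_about_menu": "passwordtrigger1",
    "ask_about_oiphaia": "passwordtrigger2",
    "ask_about_ophelia": "passwordtrigger3",
}


def age(contexts: dict[str, int]) -> dict[str, int]:
    """One matched turn passes: every active context loses one turn of lifespan."""
    return {c: n - 1 for c, n in contexts.items() if n > 1}


def password_turn(matched_intents: list[str]) -> int | None:
    """Return the 1-based turn on which the password intent fires, or None.

    matched_intents[i] is the ordinary intent that turn i+1 would match
    if the password intent were not eligible.
    """
    contexts: dict[str, int] = {"9phase3": 99}  # we start inside phase three
    for turn, intent in enumerate(matched_intents, start=1):
        active = {c for c, n in contexts.items() if n > 0}
        if GATES <= active:
            # All three input contexts are live, and the @sys.any training
            # phrase accepts any text, so this turn goes to the password intent.
            return turn
        contexts = age(contexts)
        gate = SETS_GATE.get(intent)
        if gate is not None:
            contexts[gate] = GATE_LIFESPAN
    return None


# The user covers all three topics in any order; whatever they say next unlocks the password.
print(password_turn(["ask_about_oiphaia", "small_talk", "ask_about_ophelia",
                     "ask_about_menu", "small_talk"]))  # 5
# Circling a single topic never opens all three gates.
print(password_turn(["ask_about_menu"] * 10))  # None
```

The user never has to ask for the password directly: once they have wandered through enough of phase three, their next message, whatever it is, is routed to the password intent.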

I made a quick website on a free hosting service, and added my bot to the site by using the native Dialogflow Messenger integration. It was my first time using this particular integration, and I was pleased with the results. I was even able to change the colors of the chat box to fit the Chalice Coffee brand.

This was a fun but challenging task, particularly due to its unorthodox nature. I was pleased with how creepy the bot ended up being, and I received some great feedback. Throughout the course of this project I was able to reflect on why conversation design best-practices are so effective. I also learned more about the wonders of Dialogflow context manipulation.

Ultimately, I think there are a lot of avenues for immersive, conversational storytelling through chatbots. The field of conversation design is relatively young, and I feel like there's so much potential for unique, interesting, and creative bots. Narrative chatbots could work in other applications, too: VR and video games are two spaces I can think of that would be very interesting for narrative-based interactive fiction driven by NLP.

With professional experience spanning three countries, I have confidence in my ability to adapt to any situation. As a life-long learner, I always strive to equip myself with new skills and knowledge to tackle whatever challenge may come.